For years the eLearning industry has categorized custom e-learning into three or more levels of interactivity. The implication is that learning effectiveness increases with each higher level of interactivity.

You don’t have to look hard to find them:

> 4 Levels Of Interactivity In eLearning And Its Advantages

> How Long Does it Take to Create Learning?

These kinds of categorizations originated in the vendor community as vendors sought ways to productize custom development and make it easier for customers to buy standard types of e-learning. I won’t quibble that “levels of interactivity” has helped simplify the selling/buying process in the past, but the concept is starting to outlive its usefulness, and it does a disservice to intelligent buyers of e-learning. Here’s why:

1. The real purpose is price standardization

Levels of interactivity are usually presented as a way to match e-learning level to learning goals. You’ve seen it: Level 1 basic/rapid is best for information broadcast, Level 2 for knowledge development, and Level 3 and beyond for behavior change, or something to that effect. In reality, however, very simple, well-designed basic e-learning can change behavior, and high-interaction e-learning programs can impress while providing no learning impact at all.

If vendors were honest, they would admit that the real purpose of “levels of interactivity” is to standardize pricing into convenient blocks that make e-learning easier to sell and purchase. Each level of e-learning comes with a pre-defined scope that vendors can readily put a price tag on. It’s a perfectly acceptable product positioning strategy, but it’s not going to get you the best solution to your skill or knowledge issue.

2. Interactivity levels artificially cluster e-learning features and in doing so reduce choice

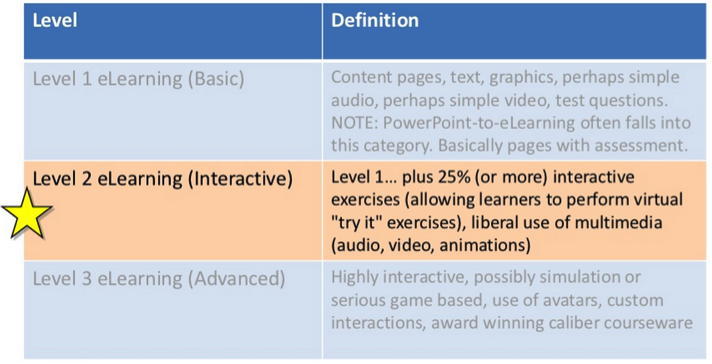

Most definitions of which features are included in each of the “levels” are vague at best. Try this widely used definition from Brandon Hall:

It’s hard to imagine looser definitions of each level. In fact there are a variety of factors that drive scope and effort (and therefore price) in a custom e-learning program. And they go well beyond “interactivity”. They include interface choices, type and volume of graphics and media, instructional strategies, existence and quality of current content, and yes, the type and complexity of user interactions.

Each of these features has a range of choices within them, but the levels of interactivity approach tends to cluster everything into one bucket and call it a level. It might look something like the following:

A Level 1 program is essentially a template-based page turner with a few stock images and interactivity limited to some standard multiple-choice self-checks. In contrast, a Level 3 program likely has a custom-designed interface, user controls, and custom media and graphics, along with more complex interactions associated with scenarios or gamification. Level 2 is the middle choice that most buyers and vendors alike are happy to land on. These media-oriented choices, by the way, have little to do with accomplishing a desired learning outcome, but that’s another discussion.

If this artificial clustering of features was ever true, it’s not any longer. Advanced simulations and scenarios can be created with very basic media and user interface features. Advanced custom interfaces and controls with rich custom media are often used for simple information presentation with very little interactivity. Powerful scenario-based learning can be created with simple levels of interactivity. Rapid e-learning tools, once relegated to the Level 1 ghetto, can create advanced e-learning, and custom programming can just as easily churn out page turners.

This clustering of features into three groups gives you less choice than you would get at your local Starbucks. If I’m a customer with a learning objective that is best served by well-produced video and animations followed by an on-the-job application exercise, I’m not sure what level I would be choosing. A good e-learning vendor will discuss these options and incorporate them into a price model that matches the customer requirement.

3. It reduces effectiveness and creativity

Forcing solutions into one of three or four options stunts creative thinking and pushes the discussion toward media and interactivity rather than toward closing a skill gap, where it belongs.

4. It hurts informed decision making

It appears to create a common language but actually reinforces the myth that there are only three or four types of e-learning. The combinations of media, interactivity and instructional approaches are as varied as the skill and knowledge gaps they are meant to address.

5. It encourages a narrow vision of e-learning

e-Learning has morphed into new forms. Pure e-learning is already in decline, cannibalized by mobile and social learning and by the glorious return of performance support. These approaches are much more flexible and nimble at addressing knowledge and skill gaps.

Yes! Thank you. I’ve been singing the same tune since I started in the business and first saw the Army’s IMI levels of interactivity chart. In defense of the tool, the Army had an ambitious scope of strategies to tackle. They needed *something* to quickly estimate and match rubber to road.

Vendors (I used to be one) take this and run with it, coloring the entire effort with the highest revenue brush they can sell. It’s an opportunity crutch that, in my opinion, has hurt the field.

We wrote some recommendations into our SOP to the effect of what you’ve written here. When this was written, I wanted to use less nice verbiage surrounding the usefulness of level of interactivity estimation. Unfortunately, a lot of people used and liked these tables, so we figured a gentle nudge would be best.

This common estimation tool is criticized on page 42 of Appendix E of the document linked here where media selections, fidelity levels, and production assets are conflated with interaction levels. It makes for an obfuscated soup that is billed at the rate of the most expensive ingredient.

http://www.uscg.mil/forcecom/training/docs/training_SOP7_Sep11.pdf

Appendix E, page 34.

Here’s an excerpt. I’d write this differently today…

“Interaction design is a complex art that considers the notion of mental models, metaphors, mapping, affordances, senses, and the user’s environment. Interactions are selected and designed based on the learning objectives or based on the need of the learner. An interaction isn’t a bundled event, commodity, or condiment. Interactions aren’t something that you add in after the content design is complete. Interactivity is the basis of communication. Interactions create a symmetrical experience that breaks the one way presentation model, propel the experience, and provide opportunities for participation.

Interaction selections should not be confused with media selections.

Levels of Interactivity

Many organizations separate interactivity into four levels. These four levels generally describe the complexity of the media and, to a lesser degree, the intensity of engagement through features that appear at each level. These models often and mistakenly include media complexity / quality at various levels. As mentioned above, interaction selections should not be confused with media selections.

A screen count per hour of instruction is commonly associated with each level of the interactivity model. While this is a widely used heuristic and a helpful tool in approximating level of effort for an entire course, it isn’t very helpful for making accurate connections between learning goals and interaction selection.

An estimation based on generalized description of interactivity levels using models similar to the table above is rarely accurate. When used to drive requirements, an arbitrary assumption based on this inaccurate model can lead to misalignment between the goals and the execution of the solution.

For specific interaction choices involving specific tasks, the level or complexity of interactivity should correlate directly to the complexity of the task or sub-task. Strategic design choices that center on meaningful actions should drive the learning experience.

The Interactivity Trap

Technologies are not inherently interactive. Interactivity in multimedia is commonly defined by the complexity of manual interaction that includes a drag, slide, click, or branch. This can frame the design of a Self-Paced eLearning experience around the activity of information transport.

To “make the course more fun or to gain attention and to wake up the learner” a designer may be tempted to dress up the presentation with empty drag, slide, click, or branch gimmicks. Don’t fall into this trap. A meaningful interaction presents the opportunity to participate in a contextual activity that will result in sharpened cognitive skills and an outcome that produces a real skill change. Interactivity provides meaningful practice.”

Steve, “obfuscated soup” might just be the most apt description of the entire approach. Thanks for sharing and the link to your document.

[…] Gram argues a similar point in Myth of E-Learning Levels of Interaction. It has become common to base estimates of time on a three-level scale of interactivity such as the […]